Commercial Outlook¶

Our commercial roadmap is driven by a single, overarching thesis: The Commodification of Robotics Software.

We believe that for general-purpose robots to achieve mass adoption, software must transition from “custom R&D projects” to standardized, deployable assets. EMOS is the vehicle for this transition.

This simple thesis rests on several assumptions; some of them are addressed in the sections below and in the FAQ.

Product Strategy¶

Our product strategy for EMOS operates on two levels: solving the hard technical problems that will enable standardization of intelligent automation behaviors (The Technology Play) and positioning EMOS as the indispensable infrastructure for the Physical AI era (The “Picks-and-Shovels” Play).

1. The Technology Play: The Missing Orchestration Layer¶

Building a true runtime for Physical AI is a deep engineering challenge that cannot be solved by simply wrapping model APIs. While the AI industry races to train larger foundation models, a critical vacuum exists in the infrastructure required to ground these models in the physical world. EMOS targets this “whitespace”. We believe that this “orchestration layer” is a frontier for massive technological innovation.

Build Adaptability Primitives¶

Component graphs emerge naturally in robotics applications. Historically, these graph definitions have been declarative and rigid (for example in ROS), because the robot performed a pre-defined task for its lifecycle. For general-purpose robots, the system needs to be dynamically configurable and have adaptability built into it.

With EMOS, we developed a beautiful, imperative API that allows the definition and launch of self-referential, adaptable (embodied-agentic) graphs, where each component defines a functional unit and the adaptability primitives (Events, Actions and Fallbacks) are fundamental building blocks. The simplicity of this API is necessary for defining automation behaviors as Recipes (Apps), where the recipe developer can trivially redefine the robot’s task and the adaptability required by the task environment.
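To make the three primitives concrete, here is a minimal, self-contained Python sketch. It is not the EMOS API: every name in it (`Component`, `Event`, `Graph`, `on`, `tick`, `nav_mode`) is hypothetical and chosen only for illustration. A component runs a step function and falls back to a degraded behavior when the step fails, while an Event on a component's state triggers an Action that reconfigures the graph itself.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Event:
    """A named condition evaluated on a component's latest state."""
    name: str
    condition: Callable[[Dict], bool]


@dataclass
class Component:
    """A functional unit with a step function and an optional Fallback."""
    name: str
    step: Callable[[Dict], Dict]
    fallback: Optional[Callable[[Dict], Dict]] = None
    state: Dict = field(default_factory=dict)

    def tick(self, inputs: Dict) -> Dict:
        try:
            self.state = self.step(inputs)
        except Exception:
            if self.fallback is None:
                raise
            # Fallback: keep the graph alive with a degraded result.
            self.state = self.fallback(inputs)
        return self.state


class Graph:
    """Hypothetical graph whose Actions may modify the graph itself."""

    def __init__(self) -> None:
        self.components: Dict[str, Component] = {}
        self.rules: List[Tuple[str, Event, Callable[["Graph"], None]]] = []
        self.config: Dict[str, str] = {"nav_mode": "patrol"}

    def add(self, component: Component) -> None:
        self.components[component.name] = component

    def on(self, source: str, event: Event, action: Callable[["Graph"], None]) -> None:
        self.rules.append((source, event, action))

    def tick(self, inputs: Dict) -> List[str]:
        for component in self.components.values():
            component.tick(inputs)
        fired = []
        for source, event, action in self.rules:
            if event.condition(self.components[source].state):
                action(self)  # Action: reconfigure the graph at runtime
                fired.append(event.name)
        return fired


def detect(inputs: Dict) -> Dict:
    # Raises KeyError when the (hypothetical) range sensor reading is missing.
    return {"person_close": inputs["distance_m"] < 2.0}


def detect_fallback(inputs: Dict) -> Dict:
    return {"person_close": False}


def switch_to_follow(graph: Graph) -> None:
    graph.config["nav_mode"] = "follow"


graph = Graph()
graph.add(Component("detector", detect, fallback=detect_fallback))
graph.on("detector", Event("person_close", lambda s: s["person_close"]), switch_to_follow)

fired_without_sensor = graph.tick({})           # sensor missing: fallback is used
mode_after_fallback = graph.config["nav_mode"]
fired_with_person = graph.tick({"distance_m": 1.2})
mode_after_event = graph.config["nav_mode"]
```

In this toy run, the missing sensor reading is absorbed by the fallback and the mode stays at `patrol`; the second tick fires the event and the action switches the mode to `follow`.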

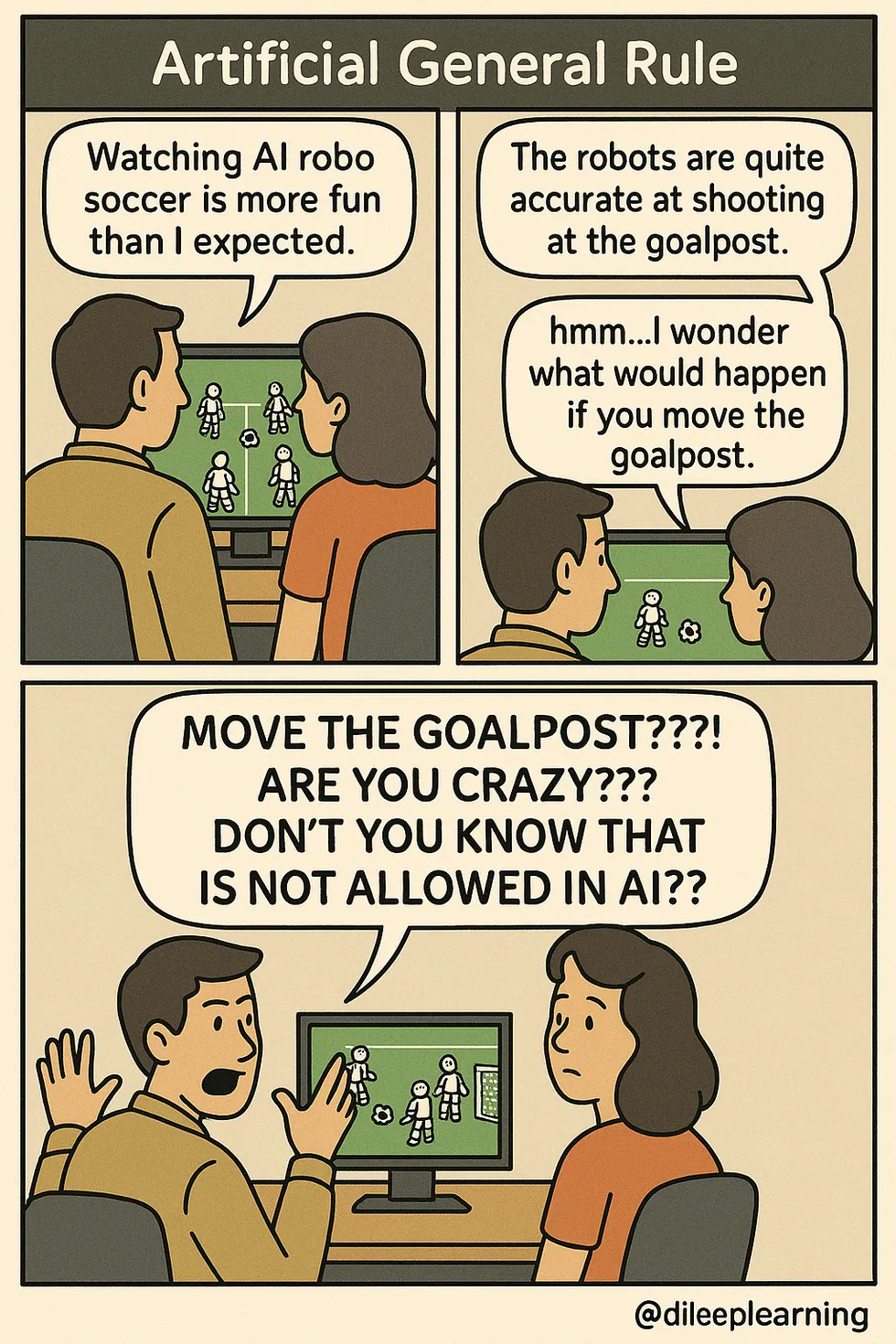

The Adaptability Imperative. Credit: AGI Comics by @dileeplearning.¶

Build Embodiment Primitives¶

Speaking more on the theme of enabling general-purpose robots to be general agents, one should note that embodiment adds certain obvious challenges which would not necessarily arise in digital agent frameworks.

For general-purpose robots to be general agents they require a sense of “self” and “history” that digital agents do not.

EMOS introduces Spatio-Temporal Semantic Memory, a referable, queryable world-state that persists across tasks. Current robots have logs, not memory: they merely record data for post-facto analysis. EMOS allows the robot to recall this data at runtime for task-specific execution. This memory is currently implemented using vector DBs, which are clearly only a first step towards building hierarchical spatio-temporal representations. There is plenty of innovation potential for building better models (e.g. graphs inspired by hippocampal structure) for the general-purpose robots of the future.
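As a rough illustration of what "queryable at runtime" means, the sketch below stores observations with an embedding, a map position and a timestamp, then retrieves them by semantic similarity restricted to a region and a time window. It is a toy in-memory stand-in, not the EMOS implementation or any vector DB client; the class and parameter names (`SpatioTemporalMemory`, `near`, `radius`, `since`) and the three-dimensional embeddings are assumptions made for the example.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class MemoryItem:
    label: str
    embedding: Tuple[float, ...]     # toy semantic vector
    position: Tuple[float, float]    # metres in a map frame
    timestamp: float                 # seconds since start


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SpatioTemporalMemory:
    """Hypothetical memory: semantic search filtered by space and time."""

    def __init__(self) -> None:
        self.items: List[MemoryItem] = []

    def add(self, item: MemoryItem) -> None:
        self.items.append(item)

    def query(
        self,
        embedding: Sequence[float],
        near: Optional[Tuple[float, float]] = None,
        radius: float = math.inf,
        since: float = -math.inf,
        top_k: int = 1,
    ) -> List[MemoryItem]:
        # Filter by time and space first, then rank by semantic similarity.
        candidates = [
            item for item in self.items
            if item.timestamp >= since
            and (near is None or math.dist(item.position, near) <= radius)
        ]
        candidates.sort(key=lambda item: cosine(item.embedding, embedding), reverse=True)
        return candidates[:top_k]


memory = SpatioTemporalMemory()
memory.add(MemoryItem("charging dock", (1.0, 0.0, 0.0), (0.0, 0.0), 10.0))
memory.add(MemoryItem("red car", (0.0, 1.0, 0.0), (12.0, 3.0), 50.0))
memory.add(MemoryItem("red car", (0.0, 0.9, 0.1), (40.0, 8.0), 400.0))

car_query = (0.0, 1.0, 0.0)
nearby = memory.query(car_query, near=(10.0, 2.0), radius=5.0)
recent = memory.query(car_query, since=100.0)
```

The same semantic query returns different sightings depending on the spatial or temporal constraint, which is the difference between a log and a memory that a task can consult while it runs.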

Similarly, state management for long-running general-purpose agents is an open problem: it requires maintaining a complex state machine that the agent can reason over to adapt its own behavior. EMOS provides these embodiment primitives so that more and more general behaviors can be automated in recipes.

Build the Utilization Layer¶

For automation behaviors to be truly regarded as “Apps”, the barrier to interaction must be zero. Robotics software thus far has just been the “backend” (algorithms), leaving the “frontend” (HRI) to custom-developed third-party interfaces. This disconnect kills the “App” model; you cannot easily distribute automation logic if the user needs to fiddle with the terminal or requires a separate application just to press “Start”.

EMOS solves this by treating the UI as a derivative function of the automation recipe. In EMOS, the recipe is the single source of truth. Defining a logic schema automatically generates the control interface. When a user deploys a “Parking Patrol” recipe, for example, they instantly receive a bespoke UI with video feeds, path configurations, and controls.
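The idea of a UI being a function of the recipe can be sketched in a few lines. The example below is an assumption-laden illustration, not how EMOS generates its interface: `RecipeInput`, `derive_ui` and the widget names are hypothetical. The point is only that the control surface is computed from what the recipe declares, so there is no separate frontend to keep in sync.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class RecipeInput:
    """One declared input or output of a recipe (hypothetical schema)."""
    name: str
    kind: str            # "bool", "number", "path" or "video"
    default: Any = None


# Hypothetical mapping from declared kinds to UI widgets.
WIDGET_FOR_KIND = {
    "bool": "toggle",
    "number": "slider",
    "path": "map_path_editor",
    "video": "video_panel",
}


def derive_ui(recipe_name: str, inputs: List[RecipeInput]) -> Dict[str, Any]:
    """Build a UI description purely from the recipe's declarations."""
    return {
        "title": recipe_name,
        "widgets": [
            {"id": item.name, "widget": WIDGET_FOR_KIND[item.kind], "default": item.default}
            for item in inputs
        ],
    }


parking_patrol_inputs = [
    RecipeInput("start", "bool", False),
    RecipeInput("patrol_path", "path"),
    RecipeInput("front_camera", "video"),
    RecipeInput("max_speed", "number", 0.5),
]

ui = derive_ui("Parking Patrol", parking_patrol_inputs)
widget_types = [w["widget"] for w in ui["widgets"]]
```

Changing the recipe's declarations changes the generated interface, which is what keeps the recipe the single source of truth.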

It is crucial to distinguish this from commercial visualization platforms like Foxglove or OSS tools like Rviz. While those tools excel at passive inspection and engineering debugging, EMOS generates active control surfaces designed for runtime interaction and meant for actually using the robot.

Solve “Solved” Problems¶

With a plethora of demos coming out for ML-based manipulation, one might be tempted to believe that navigation is a solved problem. It isn’t.

Traditional stacks, which work rather well in structured environments (e.g. autonomous driving), fail in the dynamic, unstructured environments where general-purpose robots will have to operate. In robotics, the most widely used navigation framework (Nav2) is a collection of CPU-bound control plugins, with limited adaptability defined in a behavior tree through a declarative API. This is why we built Kompass in EMOS: a highly adaptive navigation system built on GPGPU primitives.

Kompass covers four navigation modes for an agent: point-to-point navigation, following a path, following an object or person, and intelligently resting in place. It also covers all motion models for each navigation mode. Traditionally, a purpose-built robot employs only one of these navigation modes, whereas a general-purpose robot has to employ all of them depending on its task.

Kompass utilizes the discrete and integrated GPUs widely available on robotic platforms for orders-of-magnitude faster calculations (of course, without any vendor lock-in). It does this while being purpose-built for adaptability, which makes it highly configurable in response to stimuli (events) generated from ML model outputs or the robot’s internal state.

2. The “Picks-and-Shovels” Play: Infrastructure for the Physical AI Gold Rush¶

Another way to look at EMOS is as an automation building platform. This puts it in the same category as digital agent building frameworks and tools that utilize them. And while the spotlight has recently shifted from just training bigger models to the agentic infrastructure graphs required to orchestrate them, we have been architecting this solution since day one. However, unlike digital agent frameworks, EMOS provides the runtime primitives for building agents that can comprehend, navigate and manipulate the physical world while adapting their behavior at runtime.

While agent-building software for the physical world is technically much more challenging than its digital counterpart, one can still draw certain strategic parallels. Therefore, our strategy is distinct from the many “AI Labs” currently raising capital. We are not trying to win the race for the best Robotic Foundation Model (a poorly defined concept as of yet, as most work is on manipulation); we are building the platform that any effective foundation model will need to function in the physical world.

Benefit from ML Innovation¶

There is plenty of interesting work utilizing LLMs and VLMs for high-level reasoning and planning. Whether the future of direct “robot action” belongs to next-token prediction models (e.g., Pi, Groot, SmolVLA) or latent-space trajectory forecasting (e.g., V-JEPA), EMOS is uniquely positioned to benefit. As these models evolve through scaling or architectural innovation, our platform provides the environment for application specific utilization of these models as part of a complete system.

Currently we believe that Task-Specific Models will dominate over General Purpose Action Models for the foreseeable future. Unlike the digital world, “Internet-scale” data for physical robot actions does not yet exist, making out-of-domain generalization and cross-embodiment a persistent hurdle. Furthermore, current training data assumes a (fairly) static environment for manipulation tasks, which is also why unstructured navigation is a harder problem to solve with ML.

Real-world robotic intelligence will likely not be a single monolithic model, but a symphony of specialized models and control algorithms orchestrated together. EMOS is the only platform built explicitly to orchestrate this symphony.

Capture the “Access Network”¶

EMOS is oriented towards end-user applications, which means that its design choices and development direction optimize for deployment in real-world scenarios. By focusing on software that end-users actually touch (Recipes and their UI), we capture the “access network” for robotics.

EMOS enables the utility of robots, establishing a sticky ecosystem suitable for general-purpose robots. This is why we are focused on getting EMOS to its intended end-users and finding an actual commercial model with which this can be done, as explained in the sections below.

Commercial Terms & Licensing Philosophy¶

The economic model of EMOS is designed to reflect our core thesis: Software should be a standardized asset, not a custom bottleneck. To achieve this, we follow the standard OSS model, which decouples the software’s availability from its commercial utilization.

1. The Open-Source Core (MIT License)¶

EMOS and its primary components are open-source under the MIT License. This is a deliberate strategic choice driven by the motivation to achieve Ecosystem Velocity: we want open access to accelerate the creation of the “Access Network”. The more developers and AI agents building Recipes on the MIT-licensed core, the faster the EMOS standard is established.

It also provides the added advantage of Security through Transparency, something that will become increasingly important as general-purpose robots are deployed in more applications. With increasing regional compliance and sovereign data standards (especially in the EU), an open-source core allows for third-party auditing and ensures that the “source” of automation is not a black box.

OSS users can get support through GitHub issues and discussions and, more interactively, through our Discord server.

2. Value-Based Commercial Licensing¶

While the code is open, Commercial Support and Enterprise Readiness are sold as professional licenses. We follow a Value-Based Pricing model, where the cost of the software license is proportional to the hardware’s capability and market value.

Currently, we offer three tiers of licenses, typically priced between 15% and 30% of the hardware’s MSRP. This pricing reflects the transformation EMOS provides: without it, the hardware is a remote-controlled tool; with it, the hardware can be turned into an autonomous agent.

Wholesale & Retail Structure (Template Case: InMotion Robotic)¶

The following pricing structure is based on our active agreement with InMotion Robotic GMBH for the Deep Robotics Lite3 and M20 platforms. We expect to follow this identical template, i.e. scaling license costs relative to hardware MSRP, for future robot models and distributor partnerships.

| Item Code | Platform | License Tier | Distributor Cost (25% Disc.) | MSRP (Retail) |
|---|---|---|---|---|
| L3-EXP | Lite3 | Expert | €900 | €1,200 |
| L3-PRO | Lite3 | Pro | €1,835 | €2,450 |
| M20-EXP | M20 | Expert | €2,500 | €3,330 |
| M20-PRO | M20 | Pro | €6,150 | €8,200 |
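The relationship between the two price columns can be checked with a few lines of arithmetic. The sketch below uses only the figures from the table above; the function name `effective_discount` is ours. It shows that L3-EXP and M20-PRO are priced at exactly 75% of MSRP, while the listed L3-PRO and M20-EXP costs each differ from the exact 75% figure by €2.50, which we assume is the result of rounding to a convenient price point.

```python
# Distributor cost and MSRP in EUR, copied from the table above.
PRICES_EUR = {
    "L3-EXP": (900, 1200),
    "L3-PRO": (1835, 2450),
    "M20-EXP": (2500, 3330),
    "M20-PRO": (6150, 8200),
}


def effective_discount(distributor_cost: float, msrp: float) -> float:
    """Fraction of MSRP retained by the distributor as margin."""
    return 1.0 - distributor_cost / msrp


report = {
    code: {
        "exact_75_percent": 0.75 * msrp,
        "listed": cost,
        "effective_discount": effective_discount(cost, msrp),
    }
    for code, (cost, msrp) in PRICES_EUR.items()
}

for code, row in report.items():
    print(
        f"{code}: 75% of MSRP = {row['exact_75_percent']:.2f} EUR, "
        f"listed = {row['listed']} EUR, "
        f"effective discount = {row['effective_discount']:.2%}"
    )
```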

License Types & Feature Sets¶

We categorize our commercial offerings into three tiers, each designed to remove specific friction points for different actors in the ecosystem:

Expert License

Designed for R&D labs and single-robot prototyping. This tier lowers the barrier to entry, granting a perpetual commercial license, full access to the EMOS automation recipes library (“The App Store”), and 6 months of professional support. It ensures that developers have the tools and backing to build their first applications immediately.

Pro License

Designed for commercial service providers and fleets. This tier adds development of the integration hooks needed for production environments (which in EMOS translates to Action definitions) and a Deployment Optimization Report. This report is a formalized technical document validating the robot’s ML infrastructure and tuning parameters, to facilitate deployment design work. It includes 12 months of professional support.

Enterprise License

Designed for massive-scale infrastructure (possibly 10+ units). These licenses are priced on a custom project basis and include bespoke Service Level Agreements (SLAs).

3. Key Fulfillment & Payment Terms¶

Key-Triggered Invoicing: The issuance of a License Key immediately generates a commercial invoice. This ensures that the distributor can provide the software at the exact moment of the hardware sale.

Support Commencement: To protect distributor inventory, the 6/12-month support clock does not start until the end-user activates the key on a physical robot.

Liability & Compliance: Every activation is tied to the EMOS EULA, ensuring that the end-user accepts the operational responsibilities of deploying an autonomous agent.

4. Professional Service Add-ons¶

For customers requiring additional deployment support, we provide add-on services. These add-ons serve two distinct strategic purposes: enablement (overcoming technical inertia) and ecosystem capabilities (e.g. operational data collection and analysis). For details, see the agreement with InMotion.

Our Relationship with Actors in the Value Chain¶

This section explains our perspective on, and relationship with, the different actors of the robotics ecosystem. It is based on current market dynamics, and these relationships will evolve as the market for general-purpose robots develops.

1. End-Users / Robot Managers¶

The end-user is our main customer, and our relationship with them is direct and fundamental. In the legacy model, with robots performing purpose-built automation as “tools”, changing a robot’s behavior requires manufacturer intervention or expensive consultants. This situation is intractable when we consider a robot as a general-purpose “platform” that can potentially be deployed in many different applications.

Our approach is to commoditize that automation logic. We treat the end-user as the owner of the automation, not just a passive operator. By providing a high-level Python API and pre-built “Recipes,” we enable users to modify workflows in minutes. This creates a powerful feedback loop for us: the end-users tell us which real-world complexities (building structures, detection targets, human interaction etc.) are actually breaking their workflows, and we build EMOS primitives that solve these specific “edge cases” natively. We aim to make this experience completely frictionless as explained in the EMOS roadmap.

It is important to understand that the end-user market for autonomous general-purpose robots is currently mostly theoretical: outside of research institutes, field usage (if any) is teleoperated. We see ourselves as one of the players who will develop this market in the future. We believe automation demand will come from companies and use-cases which were not previously considered automatable (see case study below). End-users will require plenty of hand-holding (and dragging by the feet, if necessary) at the start, including rapid user-feedback/development cycles to improve their experience. Despite clear economic incentives, the middle-tier “robot manager” capacity will take some time to develop; this makes customer service paramount, as well as generally approaching end-users by “doing things that do not scale”.

Case Study ESA Security Solutions, Greece¶

ESA Security Solutions, a premier private security provider with over 4,500 employees, represents the leading edge of the transition from “manned guarding” to “agentic security.” By purchasing an EMOS Expert license for their DeepRobotics Lite3 LiDAR robot, they moved beyond the limitations of purpose-built single-function hardware. This was our first sale and was executed through InMotion Robotic (see case study below).

As a service provider, ESA manages a diverse portfolio of deployment scenarios, ranging from parking enforcement and perimeter fence integrity to guest reception and building lock-up inspections. In a traditional robotics model, each of these tasks would require a separate software project, custom-tuned for every new client site. With EMOS, ESA’s own team, including one Python developer, now builds these automation behaviors as modular Recipes (Apps).

Their first production recipe focuses on an automated parking lot inspection: the robot follows a pre-recorded path to verify that vehicles are correctly positioned in designated spots. As deployments scale, they intend to build a fleet with Lite3 and M20 models.

This sale provided us with critical strategic insight: even when higher management is technically sophisticated (ESA’s C-level leadership are all engineers), there is a significant initial “inertia” when moving from teleoperation to autonomous agent building. Hand-holding at the start is a necessity, not an option. In follow up, we significantly lowered the barrier to entry by:

Simplifying Spatial Mapping: We added map and path-recording workflows directly into EMOS, allowing non-technical users to record a static environment and a desired patrol route simply by walking the robot through a new space.

Active Control Surfaces: We enriched our interaction frontend web-components to move beyond passive data viewing. They provide real-time task control and visual feedback and are easily embedded into ESA’s existing third-party security management systems.

The aim of EMOS is to empower ESA to own their logic and to prove that with the right orchestration layer, “robot manager” capacity can be built from within organizations that were traditionally out of scope for automation, before general-purpose robots came along. Even so, a lot more work needs to be done to make this technology transition seamless.

2. Distributors and Integrators¶

If end-users own the use-case, distributors and integrators own the “last mile” of the sales pipeline. For traditional robots (“tools”), integrators acted as middle-men who could often add value through custom (mostly front-end) software; EMOS shifts their role toward high-value solution architecture.

For general-purpose robots, their role is currently limited to that of a distributor. For now, these distributors are the primary sales channel for both the OEMs and us. We approach these actors as Value-Added Resellers of the EMOS platform. Since EMOS is bundled on the robot, the distributors can sell its professional licenses as a “necessary” value-added offering that makes the robot ready for “second development”. They can also earn a distributor commission on allied services and recurring support contracts concluded through them.

If a distributor wants to work as a solution integrator, EMOS acts as their SDK for the physical world. Instead of resource-intensive custom development, they can focus 100% of their effort on enterprise integration: connecting robot triggers to ERPs, BMSs etc. By reducing their engineering cost, we allow smaller teams to move significantly faster at deploying solutions, which in turn accelerates our deployment footprint. In other words, we expect this role to get “thinner” with time.

Case Study InMotion Robotic GMBH¶

InMotion Robotic acts as the Master Distributor for DeepRobotics in Europe. They represent the ideal profile of our “Value-Added Reseller” partner: a company with hardware logistics capabilities with a need to make the robot hardware ready for actual utility.

While InMotion handles import, certification, and hardware maintenance, selling “bare-metal” robots to non-technical end-users (like security firms or industrial inspection clients) can require long sales cycles for establishing actual utility. We have structured a formal distribution agreement that operationalizes the EMOS value-add:

Ready-to-Deploy Bundle: EMOS is pre-installed on all M20 and Lite3 units shipped by InMotion in the European market. Instead of selling the robot and the software as separate line items, InMotion presents the robot as an “EMOS-Powered” solution in their marketing materials and Spec Sheets. This lowers the cognitive load for the buyer, who sees a complete functional system.

Margin-Driven Sales: We applied the Value-Based Pricing model defined in our Pricing Agreement with InMotion. By offering a 25% distributor margin on software licenses, we incentivize the upselling of Pro and Enterprise licenses on every hardware unit.

Support demarcation: We have clearly delineated responsibility. InMotion handles the physical hardware warranty and “Level 1” setup. We handle “Level 2 & 3” software support and recipe logic.

Through InMotion, we have secured a direct sales channel to the European market. Most people still struggle to articulate the requirement for a high-level automation development layer (it’s all very new, after all, and all they have seen so far are flimsy SDKs and shoddy documentation from OEMs). Even so, from all the feedback gathered from other distributors, the need for EMOS is quite clear.

Similar formal arrangements with the following distributors are in the pipeline:

Generation Robots: Distributors for Booster Robotics and sub-distributor for DeepRobotics.

Innov8: Master distributors for Unitree.

3. OEMs (Hardware manufacturers)¶

The hardware landscape of general-purpose robots has recently undergone a massive structural shift. It is shifting from a vertical integration legacy model (e.g., Boston Dynamics) to commoditized, cost-leading manufacturing. The old approach, where the manufacturer built everything from the robot chassis to the high-level perception logic, while necessary at the research stage, resulted in a closed ecosystem with a price tag that is prohibitive for most real-world commercial use-cases.

Today, hardware commoditization is well underway. Chinese manufacturers, particularly the aggressive “cost-leading” players like Unitree, DeepRobotics and a plethora of others have utilized public policy subsidies to scale up manufacturing and distribution. Their software focus thus far has remained the difficult problem of locomotion control, the fundamental ability to keep a humanoid or quadruped balanced on uneven terrain. As for the rest, they typically ship these machines with “barebones” software: a motion controller, basic teleoperation, and a proprietary SDK that merely exposes the robot’s raw interfaces in standard middleware.

For these manufacturers, expanding into high-level software orchestration is not a simple matter of hiring a few more engineers; it is a structural hurdle. They do not want to be caught in a talent war they cannot afford. Without a standardized runtime, these OEMs inevitably fall into a “consulting trap”, where their limited software resources are squandered on one-off custom requirements rather than on building a scalable platform. This creates a permanent software bottleneck: the customer is expected to manually patch together a working system from a fragmented collection of open-source and custom components to achieve high-level tasks like autonomous navigation or semantic understanding. This “patchwork” approach is impractical and expensive, and it remains the primary barrier to serious adoption.

There is also the non-trivial pressure of regional compliance. In Europe, for example, we see a clear and growing mandate for European-made software to satisfy stringent security and sovereign data standards. From our experience, these constraints are well understood by most players, and as the inevitable consolidation of subsidy-inflated production happens, they will become more pronounced.

Our strategy with OEMs is one of Incentivized Standardization. We believe manufacturers should focus on their core competency: the physical machine and its locomotive stability. With a single EMOS Hardware Abstraction Layer (HAL) Plugin (developed using their SDK), an OEM instantly unlocks the entire EMOS ecosystem for their hardware.

We transform the OEM’s capital-heavy machine into a “liquid” platform asset. This ensures that their unit is ready for what they like to call second-development, out of the box, allowing any EMOS Recipe written by an end-user or integrator to run on their specific chassis without custom code.

Case Study DeepRobotics - Hangzhou Yunshenchu Technology Co., Ltd¶

DeepRobotics is a tier-one player in the Chinese robotics landscape and one of the first companies in China to achieve mass production of industrial-grade quadruped robots. They are our oldest hardware partner, having signed a comprehensive Software Service Agreement in 2024. This relationship has been foundational in proving EMOS capabilities on general-purpose hardware.

While our distributor partnerships (like InMotion) focus on “bundling,” our ultimate goal with an OEM of this caliber is Factory Integration, shipping EMOS as the default OS on the robot right out of the box. However, achieving this within large hardware-centric organizations presents specific challenges. DeepRobotics, like many in its cohort, has established massive capacity driven by industrial subsidies and a focus on hardware durability and locomotive control. Consequently, their internal structure is often siloed; product teams are heavily incentivized to ship new hardware (SKUs), often viewing third-party software as a secondary concern or a loss of control.

Our strategy here is to leverage the internal pressure from their own sales divisions. While product managers may be protective of their roadmaps, the frontline sales teams acutely feel the pain of lost deals when customers realize the basic nature of the default SDK (they try their best to offload this pain to their distributors). By demonstrating to the sales leadership that EMOS converts hardware interest into signed contracts, we are gradually aligning their internal incentives to accept EMOS not as a competitor to their internal software capacity, but as the necessary utilization layer, beyond their specialization, that in the end helps move inventory.

We are currently replicating this engagement model with a pipeline of other significant hardware players (which are in various stages of development):

Booster Robotics: A Beijing based humanoid robotics startup focused on full-size and educational humanoid platforms, including the Booster T1 and K1 robots. Their systems are primarily targeted at research, education, and developer communities.

RealMan Robotics: A leading Chinese manufacturer of ultra-lightweight robotic arms that also offers modular humanoids with a wheeled base, targeting service robotics and manipulation-centric applications.

High Torque Robotics: A robotics company known for high-density joint actuators and modular humanoid platforms, including the Mini Pi+ desktop humanoid. Their robots target research, education, and rapid prototyping of high-payload humanoid systems.

Zhejiang Humanoid (Zhejiang Humanoid Robot Innovation Center): A Zhejiang based humanoid robot manufacturer developing full-size bipedal and wheeled humanoid robots such as the NAVIAI series, aimed at industrial, logistics, and real-world deployment scenarios.

4. Allied Actors¶

Beyond the direct value chain, we interact with a specialized group of allied actors whose innovations are utilized and amplified in EMOS.

Middleware Ecosystem¶

EMOS primitives are built on the industry standard, ROS2, ensuring compatibility with the vast existing ecosystem of drivers and tools. We actively participate in the Open Robotics (OSRF) community to maintain this alignment. However, we are not dogmatic about the underlying transport layer. To prepare for a future demanding higher memory safety and concurrency, compatibility with community efforts like ROSZ (a Rust-based, ROS2-compliant middleware) is in our pipeline.

Model Inference Providers¶

The ML graph infrastructure in EMOS (primarily part of EmbodiedAgents) is agnostic to the source of intelligence. It bundles light footprint local models for certain components and supports OpenAI-compatible Cloud APIs as well as local inference engines like Ollama and vLLM. Crucially, we are bridging the gap between research-focused policy learning and its utility on actual humanoids. We have collaborated with the Hugging Face LeRobot team to integrate the state-of-the-art policy models from LeRobot. The latest version of EmbodiedAgents includes an async action-chunking client that treats LeRobot as a remotely deployed Policy Server. This allows LeRobot models to drive ROS2 compatible manipulators, a pipeline that was previously disjointed.
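The general pattern behind an async action-chunking client can be sketched with `asyncio`. This is not the EmbodiedAgents client or the LeRobot API: `policy_server`, `run_client`, the integer "observations" and the latencies are all stand-ins invented for the example. What it shows is the overlap: the request for the next chunk of actions is issued before the current chunk is executed, so inference latency is hidden behind actuation.

```python
import asyncio
from typing import List

CHUNK_SIZE = 4  # number of actions returned per policy call (assumed)


async def policy_server(observation: int) -> List[int]:
    """Stand-in for a remotely deployed policy returning a chunk of actions."""
    await asyncio.sleep(0.01)  # simulated network and inference latency
    return [observation + i for i in range(CHUNK_SIZE)]


async def run_client(num_chunks: int) -> List[int]:
    executed: List[int] = []
    pending = asyncio.create_task(policy_server(0))
    for index in range(num_chunks):
        chunk = await pending
        if index + 1 < num_chunks:
            # Prefetch the next chunk while the current one is executed.
            # chunk[-1] + 1 is a toy stand-in for the observation sent
            # along with the prefetch request.
            pending = asyncio.create_task(policy_server(chunk[-1] + 1))
        for action in chunk:
            await asyncio.sleep(0.001)  # simulated actuation time
            executed.append(action)
    return executed


actions = asyncio.run(run_client(3))
```

In a real deployment the prefetch request would carry the latest sensor observation, and the client would have to reconcile overlapping chunks; both are deliberately left out of this sketch.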

Data Collection and Management¶

A general-purpose agent generates a massive volume of raw sensor data, most of which is redundant. To scale, one requires a way to surgically extract high-value signal from the noise to refine ML models, validate system behaviors and satisfy compliance requirements. We have established a partnership with Heex Technologies, an emerging leader in robotics data management. By integrating their event-triggered extraction platform with EMOS, we enable users to automate event-driven data collection without the overhead of bulk logging. This data can be visualized and manipulated on the partner’s platform. This allows EMOS end-users to maintain high-fidelity feedback loops, ensuring that every real-world encounter directly contributes to the continuous improvement of the robot’s intelligence.
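The difference between bulk logging and event-triggered extraction can be illustrated with a small ring buffer. The sketch below is a generic pattern, not the Heex platform or its EMOS integration; `EventTriggeredRecorder`, `pre_samples` and `post_samples` are hypothetical names. Samples are held only briefly in memory, and a clip is kept only when a trigger fires, together with a short window before and after the event.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Optional


class EventTriggeredRecorder:
    """Keeps a short window of samples around each triggering event."""

    def __init__(
        self,
        trigger: Callable[[Dict], bool],
        pre_samples: int = 3,
        post_samples: int = 2,
    ) -> None:
        self.trigger = trigger
        self.buffer: Deque[Dict] = deque(maxlen=pre_samples)  # rolling pre-event window
        self.post_samples = post_samples
        self.remaining_post = 0
        self.current: Optional[List[Dict]] = None
        self.clips: List[List[Dict]] = []

    def _close_clip(self) -> None:
        self.clips.append(self.current)
        self.current = None

    def push(self, sample: Dict) -> None:
        if self.current is not None:
            # A clip is open: collect post-event samples.
            self.current.append(sample)
            self.remaining_post -= 1
            if self.remaining_post == 0:
                self._close_clip()
        elif self.trigger(sample):
            # Open a clip with the buffered pre-event samples.
            self.current = list(self.buffer) + [sample]
            self.remaining_post = self.post_samples
            self.buffer.clear()
            if self.remaining_post == 0:
                self._close_clip()
        else:
            self.buffer.append(sample)  # oldest sample is dropped automatically


recorder = EventTriggeredRecorder(trigger=lambda s: s["bump"])
for t in range(10):
    recorder.push({"t": t, "bump": t == 5})

kept_timestamps = [sample["t"] for sample in recorder.clips[0]]
```

Out of ten samples in this toy stream, only the six around the event at `t == 5` are retained; everything else is discarded without ever being written out.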

Simulation Developers¶

We are working with high-fidelity simulators (Webots, IsaacSim) and asset providers like Lightwheel. Our specific focus here is different from the “Sim-to-Real” RL training crowd. We view the simulator as a Recipe Validator. The goal is to verify the logic of an entire automation Recipe in a digital twin before physical execution. This is a highly non-trivial problem for realistic, unstructured environments, and we aim to co-develop workflows with these partners to make “Digital Twin verification” a standard CI/CD step for physical automation.

Compute Hardware (NPU/GPU Vendors)¶

Because EMOS (specifically our navigation stack, Kompass) is built on GPGPU primitives, we have a symbiotic relationship with silicon providers. Our goal is to maximize the utilization of edge-compute hardware not just for ML workloads, but for navigation as well. While NVIDIA Jetson is the current dominant platform, we see a multi-provider future. We are actively collaborating with AMD to optimize for their upcoming Strix Halo platform and with Rockchip for cost-sensitive deployments, ensuring EMOS remains performant regardless of the underlying silicon. Similar collaborations are in the pipeline with BlackSesame Technologies and Chengdu Aplux Intelligence. See benchmarking results for Kompass on different platforms here.