Product: EMOS¶

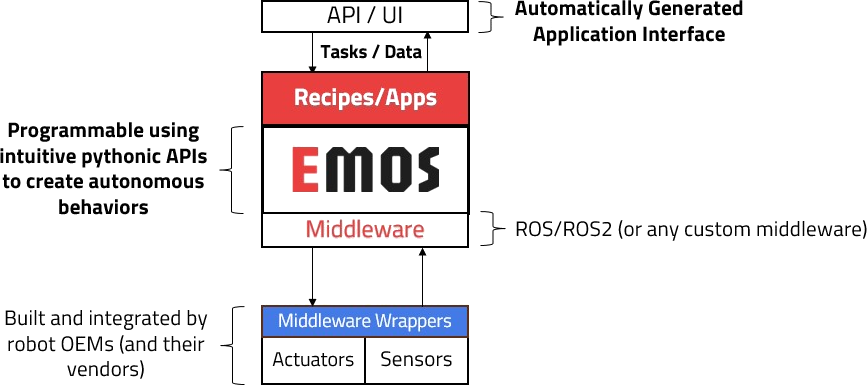

The Embodied OS, or EMOS, is the unified software layer that transforms quadrupeds, humanoids, and other general-purpose mobile robots into Physical AI Agents. Just as Android standardized the smartphone hardware market, EMOS provides a bundled, hardware-agnostic runtime that allows robots to see, think, move, and adapt in the real world.

EMOS provides system-level abstractions for building and orchestrating intelligent behavior in robots. It is the software layer required for the transition from robot as a tool (performing one specific task throughout its lifecycle) to robot as a platform (performing across different application scenarios), i.e. fulfilling the promise of general-purpose robotics. It is primarily targeted at end-users of robots, so that they can use and customize pre-built automation routines (called recipes) or conveniently program their own automation routines.

EMOS allows end-users or other actors in the value chain (integrators or OEM teams) to create rich autonomous capabilities using its ridiculously simple Python API¶

The product in a few words

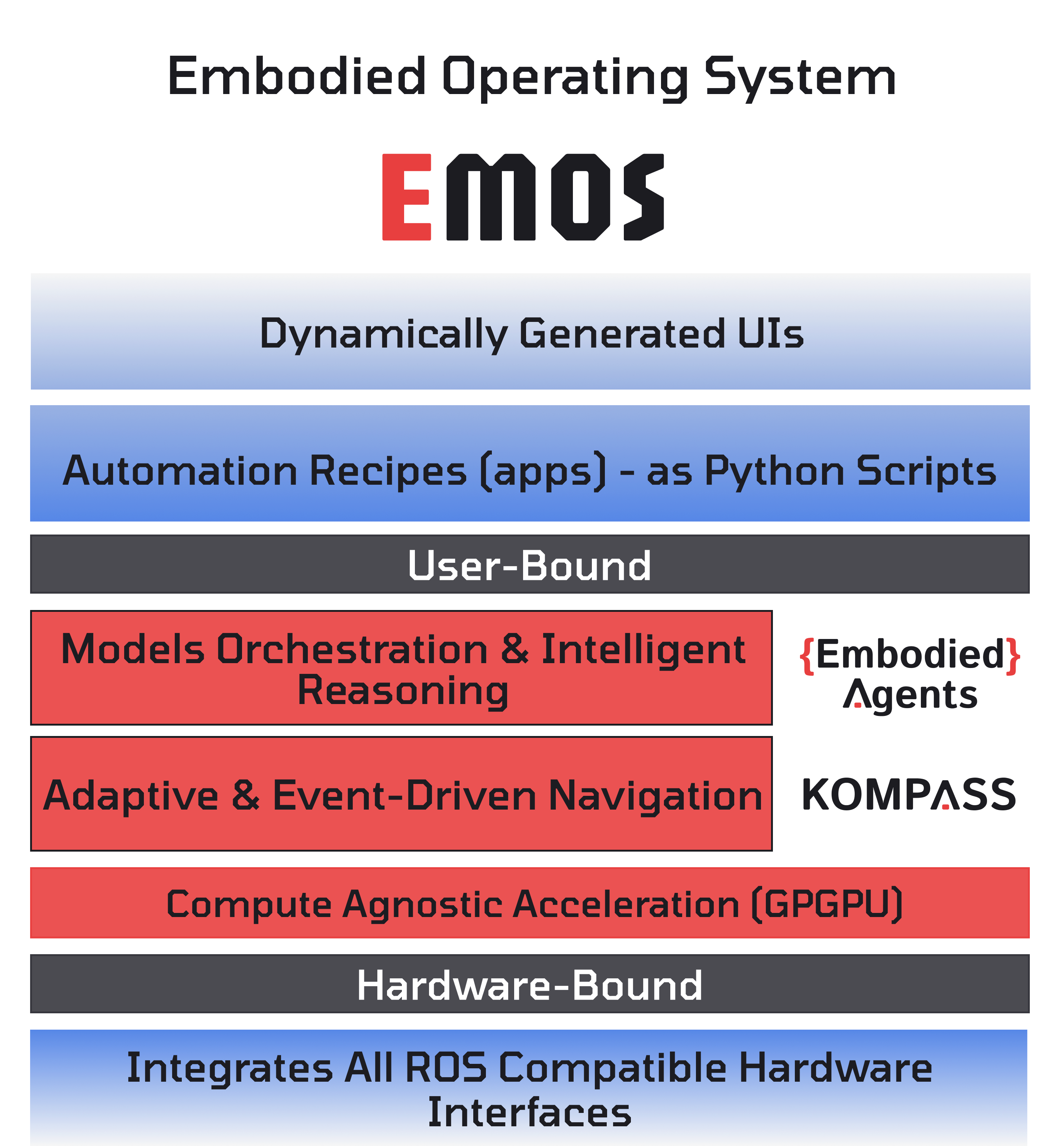

Our product is EMOS (The Embodied OS), the industry’s first unified runtime and ecosystem for Physical AI. Think of EMOS as the Android for Robotics: a hardware-agnostic platform that decouples the robot’s “Body” from its “Mind” by providing:

The Runtime: A bundled software stack that combines Kompass (our GPGPU-accelerated navigation layer) with EmbodiedAgents (our cognitive reasoning and manipulation layer). It turns any pile of motors and sensors into an intelligent agent out-of-the-box.

The Platform: A development framework and future Marketplace that allows engineers to write “Recipes” (Apps) once using a simple Python API, and deploy them across any robot—from quadrupeds to humanoids—without rewriting code.

What is a Physical AI Agent?¶

A Physical AI Agent is more than a machine executing serialized instructions, and it is distinct from a disembodied digital agent such as a coding or browser-use agent. It combines intelligence and adaptivity with embodiment. EMOS makes this possible out-of-the-box:

See & Understand: Interpret the world with multi-modal ML models.

Think & Remember: Use spatio-temporal semantic memory and contextual reasoning.

Move & Manipulate: Execute GPU-powered navigation and VLA-based manipulation in dynamic environments.

Adapt in Real Time: Reconfigure logic at runtime based on tasks, environmental events and internal state.

What’s Inside EMOS?¶

EMOS is built on open-source, publicly developed core components that work in tandem:

The Embodied Operating System¶

| Component | Layer | Function |
|---|---|---|
| EmbodiedAgents | The Intelligence Layer | The orchestration framework for building arbitrary agentic graphs of ML models, along with hierarchical spatio-temporal memory, information routing and event-driven adaptive reconfiguration. |
| Kompass | The Navigation Layer | The event-driven navigation stack responsible for GPU-powered planning and control for real-world mobility on any hardware. |
| | The Architecture Layer | The meta-framework that underpins both EmbodiedAgents and Kompass, providing event-driven system design primitives and a beautifully imperative system specification and launch API. |

Transforming Hardware into Intelligent Agents: What does EMOS add to robots?¶

EMOS unlocks the full potential of robotic hardware at both functional and resource utilization levels.

1. From Custom Code to Universal “Recipes” (Apps)¶

EMOS replaces brittle, robot-specific and task-specific software “projects” (a patchwork of launch files for ROS packages and custom code) with “Recipes”: reusable, hardware-agnostic application packages.

One Robot, Multiple Tasks, Multiple Environments: The same robot can run multiple recipes. Each recipe can target a different application scenario, or define a particular set of constitutive parameters for the same application performed in different environments.

Universal Compatibility: A recipe written for one robot runs identically on other robots. For example, a “Security Patrol” recipe defined for a wheeled AMR would work seamlessly on a quadruped (given a similar sensor suite), with EMOS handling the specific kinematics and action commands.
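
To illustrate the idea of one recipe running on different bodies, here is a minimal, self-contained sketch. It does not use the EMOS API: `Twist`, `DriveAdapter`, `DifferentialDrive`, `QuadrupedGait` and `patrol_step` are hypothetical names invented for this example, assuming the recipe emits a generic velocity command and a per-robot adapter translates it.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Twist:
    """Generic body-frame motion command a recipe might emit (hypothetical type)."""
    linear: float   # forward speed in m/s
    angular: float  # yaw rate in rad/s


class DriveAdapter(Protocol):
    """Hypothetical per-robot adapter: turns a Twist into platform commands."""
    def apply(self, cmd: Twist) -> dict: ...


@dataclass
class DifferentialDrive:
    wheel_base: float  # distance between left and right wheels in m

    def apply(self, cmd: Twist) -> dict:
        # Differential-drive kinematics: each wheel gets the body speed
        # minus or plus the contribution of the yaw rate.
        half = self.wheel_base / 2.0
        return {
            "left_wheel": cmd.linear - cmd.angular * half,
            "right_wheel": cmd.linear + cmd.angular * half,
        }


@dataclass
class QuadrupedGait:
    max_speed: float  # speed limit of the gait controller in m/s

    def apply(self, cmd: Twist) -> dict:
        # A legged platform forwards a clamped body velocity to its gait controller.
        speed = max(-self.max_speed, min(self.max_speed, cmd.linear))
        return {"body_speed": speed, "yaw_rate": cmd.angular}


def patrol_step(adapter: DriveAdapter) -> dict:
    """The 'recipe' logic: identical for every robot, unaware of the drive type."""
    return adapter.apply(Twist(linear=0.5, angular=0.2))


wheeled = patrol_step(DifferentialDrive(wheel_base=0.4))
legged = patrol_step(QuadrupedGait(max_speed=0.3))
```

The same `patrol_step` call yields wheel speeds on the wheeled base and a clamped body velocity on the quadruped, which is the separation of application logic from kinematics described above.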

2. Real-World Event-Driven Autonomy¶

While current robots are limited to controlled environments that are simple to navigate, EMOS enables dynamic behavior switching based on environmental context, which is the basis for the adaptivity required in future general-purpose robot deployments.

Granular Event Bindings: Events can be defined on any information stream, from hardware diagnostics to high-level ML outputs. Events can trigger component level actions (taking a picture using the Vision component), infrastructure level actions (reconfiguring or restarting a component) or arbitrary actions defined in the recipe (sending notifications via an API call).

Imperative Fallback Logic: Developers can write recipes that treat failure as a control flow state rather than a system crash. By letting developers define arbitrary recovery functions, such as switching the navigation controller upon encountering humans or switching from a larger cloud model to a lightweight local ML model on communication loss, EMOS guarantees operational continuity in chaotic, real-world conditions.
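
The event-binding pattern described above can be sketched in plain Python. This is an illustrative toy, not the EMOS event API: `Event`, `EventBus`, `bind` and `publish` are hypothetical names, and it assumes an event is simply a condition evaluated on each value published to a named stream.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Event:
    """Hypothetical event: fires when `condition` holds for a value on `topic`."""
    name: str
    topic: str
    condition: Callable[[Any], bool]


@dataclass
class EventBus:
    """Hypothetical dispatcher that runs bound actions when events fire."""
    bindings: list = field(default_factory=list)

    def bind(self, event: Event, *actions: Callable[[Any], None]) -> None:
        self.bindings.append((event, actions))

    def publish(self, topic: str, value: Any) -> list:
        """Evaluate every event bound to `topic`; return names of fired events."""
        fired = []
        for event, actions in self.bindings:
            if event.topic == topic and event.condition(value):
                for action in actions:
                    action(value)
                fired.append(event.name)
        return fired


log = []
bus = EventBus()
# Event on a hardware diagnostic stream, bound to an arbitrary notification action.
bus.bind(Event("low_battery", "battery_level", lambda v: v < 0.2),
         lambda v: log.append(f"notify: battery at {v:.0%}"))
# Event on a high-level ML output, bound to a behavior switch.
bus.bind(Event("person_seen", "detections", lambda labels: "person" in labels),
         lambda labels: log.append("switch controller to cautious mode"))

fired_battery = bus.publish("battery_level", 0.15)
fired_vision = bus.publish("detections", ["pallet", "person"])
fired_none = bus.publish("battery_level", 0.9)
```

Because an action is just a callable, the same mechanism covers component-level actions, infrastructure-level actions and custom recovery functions.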

3. Dynamic Interaction UI¶

In robotics software, automation is considered backend logic, and front-ends are application-specific, custom-developed projects. With EMOS recipes, an automation behavior becomes an “App”, and the front-end is auto-generated by the recipe itself for real-time monitoring and human-robot interaction.

Schema-Driven Web Dashboards: EMOS automatically renders a fully functional, web-based interface directly from the recipe definition. This dashboard consolidates real-time telemetry, structured logging, and runtime configuration settings into a single view. The view itself is easily customizable.

Composable Integration: Built on standard web components, the generated UI elements are modular and portable by design. This allows individual widgets (such as a video feed, a “Start” button, or a map view) to be easily embedded into third-party external systems, such as enterprise fleet management software or existing command center portals, ensuring seamless interoperability.
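
As a rough illustration of what "schema-driven" could mean, the sketch below derives a widget description from a settings object. It is an assumption-laden toy, not how EMOS renders its dashboard: `PatrolSettings`, `WIDGETS` and `ui_schema` are hypothetical names, and the widget kinds are made up for the example.

```python
import json
from dataclasses import dataclass, fields

# Hypothetical mapping from Python value types to dashboard widget kinds.
WIDGETS = {bool: "toggle", int: "number", float: "number", str: "text"}


@dataclass
class PatrolSettings:
    """Hypothetical runtime settings exposed by a patrol recipe."""
    max_speed: float = 0.8
    loop_route: bool = True
    zone_name: str = "warehouse-a"


def ui_schema(settings) -> list:
    """Describe each setting as a widget that a front-end could render."""
    schema = []
    for f in fields(settings):
        value = getattr(settings, f.name)
        schema.append({"name": f.name, "widget": WIDGETS[type(value)], "value": value})
    return schema


schema = ui_schema(PatrolSettings())
print(json.dumps(schema, indent=2))
```

Since the schema is plain JSON, each entry could be rendered as an independent widget, which is what makes embedding individual elements in a third-party portal plausible.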

4. Optimized Edge Compute Utilization¶

EMOS maximizes robot hardware utilization, allowing recipes to use all compute resources available.

Hybrid AI Inference: EMOS enables seamless switching between edge and cloud intelligence. Critical, low-latency perception models can be automatically deployed to on-chip NPUs to ensure fast reaction times, while complex reasoning tasks can be routed to the cloud. This hybrid approach balances cost, latency, and capability in real-world deployments.

Hardware-Agnostic GPU Navigation: Unlike traditional stacks that bottleneck the CPU with heavy geometric calculations, EMOS includes the industry’s only navigation engine built to utilize the GPU for compute-heavy navigation tasks. On CPU-only platforms, it uses process-level parallelization instead.

Developer Experience¶

The primary goal of EMOS is commodification of robotics software by decoupling the “what” (application logic written in recipes) from the “how” (underlying perception and control). This abstraction allows different actors in the value chain to do robotic software development at the level of abstraction most relevant to their goals, whether they are operational end-users, solution integrators, or hardware manufacturers.

Developer Categories¶

Because EMOS is built with a simple Pythonic API, it removes the steep learning curve typically associated with ROS or proprietary vendor stacks. Its ultimate goal is to empower end-users to take charge of their own automation needs, thus fulfilling the promise of a general-purpose robot platform that does not depend on the manufacturer or on third-party expertise to become operational. EMOS empowers the following three categories in different ways:

The End-User / Robot Manager¶

Perspective: These are use-case owners rather than robotics engineers. They require robots to be effective agents for their tasks, not research projects. Design choices in EMOS primarily cater to their perspective.

Experience: They primarily utilize pre-built Recipes or make minor configurations to existing ones. For those with basic scripting skills, the high-level Python API allows them to string together complex behaviors in minutes, focusing purely on business logic without worrying about the underlying perception and control. In future releases (2026), they will additionally have a GUI-based agent builder, along with agentic building through plain-text instructions.

Distributors / Integrators¶

Perspective: These are distributors (more relevant in the case of general-purpose robots) tasked with selling the robot to end-users, or solution consultancies and freelance engineers tasked with fitting the robot into a larger enterprise ecosystem. They care about interoperability, extensibility, and custom logic to fit the end-user’s requirements.

Experience: EMOS provides them with a robust “glue” layer. They can utilize the Event-Driven architecture to create custom workflows from robot triggers to external APIs (like a Building Management System). For them, EMOS is an SDK that handles the physical world, allowing them to focus on the digital integration in the recipes they build.

OEM Teams¶

Perspective: These are the hardware manufacturers building the robot chassis. Their goal is to ensure their hardware is actually utilized by customers and performs optimally.

Experience: Instead of maintaining a fragmented software stack, they focus on developing EMOS HAL (Hardware Abstraction Layer) Plugins. Since EMOS can utilize any underlying middleware (the most popular being ROS), this work is minimal and usually just involves making vendor-specific interfaces available as EMOS primitives. By writing this simple plugin once, they instantly unlock the entire EMOS ecosystem for their hardware, ensuring that the unit is ready for what they call “second-development” and that any Recipe written by an end-user or integrator can run flawlessly on their specific chassis. (Currently, we are growing the plugin library ourselves.)

Recipe Examples¶

Recipes in EMOS are not just scripts; they are complete agentic workflows. By combining capabilities in Kompass (Navigation) with EmbodiedAgents (Intelligence), developers can build sophisticated, adaptive behaviors in just a few lines of Python. What makes EMOS unique is not just the fact that these behaviors (apps) can be deployed on general-purpose robots, but that they are available simultaneously on the same robot and can be switched on/off as per the task.

Below are four examples selected from our documentation that showcase this versatility.

1. The General Purpose Assistant¶

Use Case: A general-purpose helper robot in a manufacturing fab-lab. The robot acts as a hands-free assistant for technicians whose hands are busy with tools. It must intelligently distinguish between three types of verbal requests:

1. General Knowledge: "What is the standard torque for an M6 bolt?"

2. Visual Introspection: "Is the safety guard on the bandsaw currently open?"

3. Navigation: "Go to the tool storage area."

The Recipe: This is a sophisticated graph architecture. It uses a Semantic Memory component to store its introspective observations and a Semantic Router to analyze the user’s intent. Based on the request, it routes the command to:

Kompass: For navigation requests (utilizing a semantic map).

VLM: For visual introspection of the environment.

LLM: For general knowledge queries.

    # Define the routing logic based on semantic meaning, not just keywords
    llm_route = Route(samples=["What is the torque for M6?", "Convert inches to mm"])
    mllm_route = Route(samples=["What tool is this?", "Is the safety light on?"])
    goto_route = Route(samples=["Go to the CNC machine", "Move to storage"])

    # The Semantic Router directs traffic based on intent
    router = SemanticRouter(
        inputs=[query_topic],
        routes=[llm_route, goto_route, mllm_route],  # Routes to Chat, Nav, or Vision
        default_route=llm_route,
        config=router_config
    )
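
To give an intuition for routing by sample similarity, here is a toy, standalone sketch. It is not the SemanticRouter implementation: it assumes routing picks the route whose samples are most similar to the query, and it swaps in bag-of-words cosine similarity where a real router would use a learned embedding model. `embed`, `cosine`, `route`, `ROUTES` and the `threshold` value are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def route(query: str, routes: dict, default: str, threshold: float = 0.2) -> str:
    """Return the route whose best sample is most similar to the query.

    Falls back to `default` when no route scores above `threshold`.
    """
    q = embed(query)
    best_name, best_score = default, threshold
    for name, samples in routes.items():
        score = max(cosine(q, embed(s)) for s in samples)
        if score > best_score:
            best_name, best_score = name, score
    return best_name


# Route samples taken from the recipe excerpt above.
ROUTES = {
    "llm": ["What is the torque for M6?", "Convert inches to mm"],
    "vision": ["What tool is this?", "Is the safety light on?"],
    "goto": ["Go to the CNC machine", "Move to storage"],
}

nav = route("Go to the tool storage area", ROUTES, default="llm")
look = route("Is the safety guard open?", ROUTES, default="llm")
chat = route("Hello there", ROUTES, default="llm")
```

Word overlap is a crude proxy for meaning; the point of embedding-based routing is that a request phrased with entirely different words can still land on the right route.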

2. The Resilient “Always-On” Agent¶

Use Case: A robot is deployed in a remote facility with unstable internet (e.g., an oil rig or a basement archive). It normally uses a powerful cloud-based model (like GPT-5.2 with OpenAI API or Qwen-72B hosted on a compute-heavy server on the local network) for high-intelligence reasoning, but it cannot afford to “freeze” if the connection drops.

The Recipe: This recipe demonstrates Runtime Robustness. The agent is configured with a “Plan A” (Cloud Model) and a “Plan B” (Local Quantized Model). We bind an on_algorithm_fail event to the component; if the cloud API times out or fails, EMOS automatically hot-swaps the underlying model client to the local backup without crashing the application.

    # Bind Failures to the Action
    # If the cloud API fails (runtime), instantly switch to the local backup model
    llm_component.on_algorithm_fail(
        action=switch_to_backup,
        max_retries=3
    )
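
The retry-then-swap behavior can be sketched without any robotics dependencies. The block below is a hypothetical illustration, not EMOS internals: `ModelUnavailable`, `ResilientLLM` and the two stub clients are invented names, and the reading of `max_retries` as "attempts on the active client before swapping" is an assumption.

```python
class ModelUnavailable(Exception):
    """Raised by a client when its backend cannot be reached (hypothetical)."""


class ResilientLLM:
    """Wraps a primary client and swaps to a backup after repeated failures."""

    def __init__(self, primary, backup, max_retries=3):
        self.client = primary
        self.backup = backup
        self.max_retries = max_retries

    def ask(self, prompt):
        for _ in range(self.max_retries):
            try:
                return self.client(prompt)
            except ModelUnavailable:
                continue
        # All attempts failed: swap the active client so that later calls
        # go straight to the backup instead of retrying the dead backend.
        self.client = self.backup
        return self.client(prompt)


cloud_calls = []


def cloud_model(prompt):
    """Stub for a cloud client whose connection has dropped."""
    cloud_calls.append(prompt)
    raise ModelUnavailable("connection timed out")


def local_model(prompt):
    """Stub for a small quantized model running on the robot."""
    return f"local: {prompt}"


llm = ResilientLLM(cloud_model, local_model, max_retries=3)
first = llm.ask("status?")
second = llm.ask("next waypoint?")
```

After the first call exhausts its three attempts, the second call is answered by the local model without touching the cloud client again.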

3. The Self-Recovering Warehouse AMR¶

Use Case: An Autonomous Mobile Robot (AMR) operates in a cluttered warehouse not specifically built for robotic autonomy. Occasionally, it gets cornered or stuck against pallets, causing the path planner to fail. Instead of triggering a “Red Light” requiring a human to manually reset it, the robot should attempt to unblock itself.

The Recipe: This recipe utilizes Event/Action pairs for self-healing. We define specific events for controller_fail and planner_fail. These are linked to a specific move_to_unblock action in the DriveManager, allowing the robot to perform recovery maneuvers automatically when standard navigation algorithms get stuck.

    # Define Events/Actions dictionary for self-healing
    events_actions = {
        # If the emergency stop triggers, restart the planner and back away
        event_emergency_stop: [
            ComponentActions.restart(component=planner),
            unblock_action,
        ],
        # If the controller algorithm fails, attempt unblocking maneuver
        event_controller_fail: unblock_action,
    }

4. The “Off-Grid” Field Mule¶

Use Case: A surveyor is exploring a new construction site or a disaster relief zone where no map exists. They need the robot to carry specialized equipment and follow them closely. Since the environment is unknown and dynamic, standard map-based planning is impossible.

The Recipe: This recipe relies on the VisionRGBDFollower controller in Kompass. By fusing depth data with visual detection, the robot “locks on” to the human guide and reacts purely to the local geometry. This allows it to navigate safely in unstructured, unmapped environments by maintaining a precise relative vector to the human, effectively acting as a “tethered” mule without requiring GPS or SLAM. Even in mapped environments, the same robot can be made to do point-to-point navigation or following by using command intent based routing from Example 1.

    # Setup controller to use Depth + Vision Fusion
    controller.inputs(
        vision_detections=detections_topic,
        depth_camera_info=depth_cam_info_topic
    )
    controller.algorithm = "VisionRGBDFollower"
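
For intuition on how a relative vector to the guide can be turned into motion, here is a simple proportional-control sketch. It is not the VisionRGBDFollower algorithm: `FollowerConfig`, `follow_command`, the gains and the field-of-view value are hypothetical, and the bearing is approximated as linear in the pixel offset.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class FollowerConfig:
    """Hypothetical tuning parameters for the sketch."""
    target_distance: float = 1.5                # desired gap to the person in m
    horizontal_fov: float = math.radians(70.0)  # camera field of view in rad
    linear_gain: float = 0.8
    angular_gain: float = 1.5
    max_linear: float = 1.0                     # speed limit in m/s
    max_angular: float = 1.0                    # yaw-rate limit in rad/s


def clamp(value: float, limit: float) -> float:
    """Restrict value to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def follow_command(bbox_center_x: float, image_width: int, depth_m: float,
                   cfg: Optional[FollowerConfig] = None) -> tuple:
    """Return (linear, angular) velocity that keeps the target centered and in range."""
    cfg = cfg or FollowerConfig()
    # Bearing from the detection: pixel offset from the image center scaled to
    # the field of view. Positive means the target is to the left.
    offset = (image_width / 2.0 - bbox_center_x) / image_width
    bearing = offset * cfg.horizontal_fov
    # Range from the depth image: drive forward if too far, back up if too close.
    linear = clamp(cfg.linear_gain * (depth_m - cfg.target_distance), cfg.max_linear)
    angular = clamp(cfg.angular_gain * bearing, cfg.max_angular)
    return linear, angular


centered = follow_command(bbox_center_x=320, image_width=640, depth_m=1.5)
far_right = follow_command(bbox_center_x=480, image_width=640, depth_m=5.0)
too_close = follow_command(bbox_center_x=320, image_width=640, depth_m=1.0)
```

The command depends only on the current detection and its depth, which is why this style of following needs neither a map nor a global position estimate.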